reCAPTCHA Issues Starting November 2012

Wed, 23 Jan 2013 14:08:38 EST

Returning site visitors may have noticed that comments were on and off throughout November and December. I spent most of my holiday vacation dealing with what I think was a semi-automated attack against reCAPTCHA that required certain form conditions to work. I still haven't found any information online that describes the attack I was seeing against this site's comment system. After allowing a cool-off period with the changes that I implemented, I wanted to explain exactly what I was seeing and give a general description of the changes I made.

This site's comment system allows links inserted as plain HTML within comments. Around the beginning of November, spam comments started appearing in the reCAPTCHA-protected comment forms. The comments seemed to have two aims:

1. Add the typical spam links to all sorts of random junk like viruses and impersonation sites.

2. Affect search engine results through keyword insertion in the comments.

After observing the comments, the first action I took was to start logging the IP addresses or proxy servers posting the comments, along with the reCAPTCHA challenges and responses. They were all different, and came from different locales throughout the world. As far as I could tell, the challenges were being solved using the text input. The only technology I could think of that could originate requests from all over the world was a botnet.
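The kind of check described above can be sketched in a few lines of Python. This is only an illustration, not the logging code used on this site: the function name `summarize_sources`, the `(ip, challenge_id)` log format, and the sample addresses (taken from the reserved documentation ranges) are all assumptions.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def summarize_sources(entries: Iterable[Tuple[str, str]]) -> Dict[str, object]:
    """Summarize logged (ip_address, challenge_id) pairs for spam submissions.

    The log format is hypothetical; adapt it to whatever your server records.
    """
    entries = list(entries)
    ip_counts = Counter(ip for ip, _ in entries)
    challenge_counts = Counter(challenge for _, challenge in entries)
    return {
        "submissions": len(entries),
        "distinct_ips": len(ip_counts),
        "distinct_challenges": len(challenge_counts),
        # Addresses that posted more than once; an empty list suggests
        # a widely distributed source.
        "repeat_ips": sorted(ip for ip, n in ip_counts.items() if n > 1),
    }


# Placeholder data using documentation-only IP ranges, not real log entries.
sample_log = [
    ("192.0.2.10", "challenge-a"),
    ("198.51.100.7", "challenge-b"),
    ("203.0.113.5", "challenge-c"),
]
summary = summarize_sources(sample_log)
```

If nearly every submission comes from a distinct address and a distinct challenge, as was the case here, blocking by IP is unlikely to help.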

I then started looking at my own implementation of reCAPTCHA. I was using the JavaScript API to generate the captcha, but using the server-side scripts to submit it. I also noticed I was using a very old key, from before Google took over, that was not locked to a specific domain. I successfully performed a man-in-the-middle attack against my own site using a few utilities.

The next action I took was to update the server-side and client-side JavaScript libraries from reCAPTCHA and implement a domain-specific key. That didn’t work. As soon as I re-enabled comments in early December, spam links were immediately re-posted.

At this point I analyzed all of the comment forms. I was using fixed field names for each form field. From my own experience doing screen scraping on the server side, and client-side man-in-the-middle DOM manipulation using JavaScript, my hypothesis was that a tool was being used to present my reCAPTCHA images to users around the world, who were solving them for whatever reason, and that a tool was then posting the results back to my site. Drawing on that knowledge of screen scraping, I spent most of my holiday vacation on a server-side implementation that I don’t think I could reliably scrape myself without masterful regular expression skills and in-depth analysis of my forms. If you look at the source of this site, the field names are all junk now. I spent considerable time trying to prevent all the tricks I would use to screen scrape. Since I unveiled the revised implementation shortly before the new year, spam comments have stopped, and comments are re-enabled.
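For readers wondering what "junk" field names might look like in practice, here is a minimal Python sketch of one way to do it. It is not the implementation running on this site. The logical field names, the function names, and the HMAC-based derivation are all assumptions made for illustration.

```python
import hashlib
import hmac
import secrets
from typing import Dict, Optional

# Hypothetical logical field names; the real form's fields are not listed here.
LOGICAL_FIELDS = ("name", "email", "comment")


def new_form_token() -> str:
    """Return a random token generated each time the form is rendered."""
    return secrets.token_hex(16)


def field_names(secret_key: bytes, form_token: str) -> Dict[str, str]:
    """Map each logical field to an opaque name derived from the token."""
    names = {}
    for logical in LOGICAL_FIELDS:
        message = f"{form_token}:{logical}".encode()
        digest = hmac.new(secret_key, message, hashlib.sha256).hexdigest()
        # Prefix with a letter so the name never starts with a digit.
        names[logical] = "f" + digest[:16]
    return names


def decode_submission(
    secret_key: bytes, form_token: str, posted: Dict[str, str]
) -> Optional[Dict[str, str]]:
    """Translate opaque posted names back to logical ones.

    Returns None when any expected field is missing, which is what a tool
    posting the old fixed names would trigger.
    """
    decoded = {}
    for logical, opaque in field_names(secret_key, form_token).items():
        if opaque not in posted:
            return None
        decoded[logical] = posted[opaque]
    return decoded
```

The server would render the form with the opaque names, remember the token (for example in the session), and call `decode_submission` on POST. This only defeats tools keyed to fixed names; a scraper that parses the form structure on every request would need further obstacles.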

I have a strong feeling that other sites are experiencing a similar attack but not writing anything about it. I examined a few popular CMS form implementations in preparation for doing my own revisions, and I don't think they could stop this attack. Many sites set a client-side cookie before form submission, then validate the data in the cookie on submission. A smart bot programmer could easily implement cookie handling, and do this on a CMS-by-CMS basis for sites around the globe. Another technique used in combination is to generate random form IDs, which I think the CMS stores in a database.
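To make the cookie technique concrete, here is a rough Python sketch of a signed, time-limited cookie check. It is a generic illustration and not taken from any particular CMS. The function names, the `timestamp.signature` cookie format, and the one-hour lifetime are assumptions.

```python
import hashlib
import hmac
import time
from typing import Optional


def issue_cookie(secret_key: bytes, now: Optional[float] = None) -> str:
    """Build a 'timestamp.signature' value to set when the form is served."""
    issued = str(int(time.time() if now is None else now))
    signature = hmac.new(secret_key, issued.encode(), hashlib.sha256).hexdigest()
    return f"{issued}.{signature}"


def cookie_is_valid(
    secret_key: bytes,
    cookie: str,
    max_age: int = 3600,
    now: Optional[float] = None,
) -> bool:
    """Check the signature and age of a cookie sent back with the form."""
    issued, separator, signature = cookie.partition(".")
    if not separator or not (issued.isascii() and issued.isdigit()):
        return False
    expected = hmac.new(secret_key, issued.encode(), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how much of the signature matched.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return False
    current = time.time() if now is None else now
    return 0 <= current - int(issued) <= max_age
```

Note what this does and does not prove: the check passes for any client that fetched the form and sent the cookie back. A bot with an ordinary cookie jar does exactly that, which is why I don't think this technique alone would have stopped the attack.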

After dealing with this attack and reflecting on my own experience, it really seems like captchas are just a superficial barrier to attack. As for my explanation above, I don't know the specifics; I am just guessing based on observations and my own knowledge of DOM manipulation, screen scraping, and experience with this attack.


Charles Palen has been involved in the technology sector for several years. His formal education focused on Enterprise Database Administration. He currently works as the principal software architect and manager at Transcending Digital where he can be hired for your next contract project. Charles is a full stack developer who has been on the front lines of small business and enterprise for over 10 years. Charles's current expertise covers the areas of human pose estimation models, diffusion models, agentic workflows, .NET, Java, Python, Node.js, JavaScript, HTML, and CSS. Charles created Technogumbo in 2008 as a way to share lessons learned while making original products.

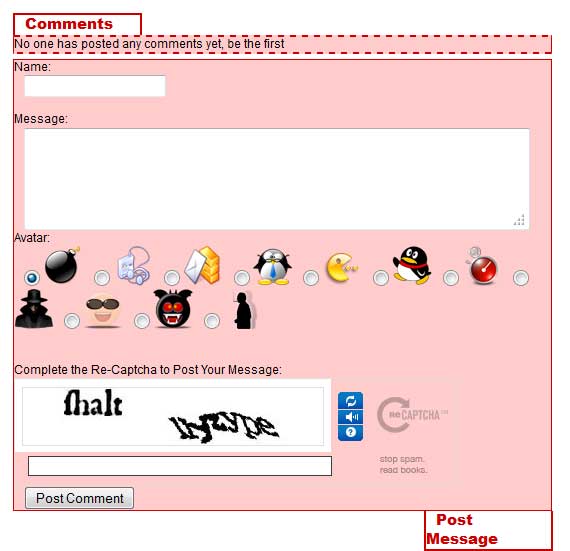
