Growing German Chamomile Lifecycle Guide

Tue, 05 Nov 2025 10:00:00 EST

(The following article consists of the text transcript of the referenced YouTube video.)

Today I'm going to walk you through the steps I use to grow German chamomile in a container, covering its entire life cycle over 2025. First things first: we live and grow in USDA Hardiness Zones 6a and 6b, so winter lows here reach negative 10 to 0 degrees Fahrenheit. Soils are different everywhere, and as you know if you enjoy gardening, that makes a big difference as well.

Starting Indoors: April 2025

Here we are on April 13th, 2025. On the left we have four chamomile plants; on the right, there's some lavender in the cell tray. You can see some powdery mildew on this larger chamomile plant. You can take care of that with simple hydrogen peroxide in a spray bottle. I don't know what methods other people use, but that is probably the simplest and most widely available option, and it's what we use while growing inside and preparing for the summer.

Moving Outdoors: Late May

Here is May 24th. This is the first day the plant went outside, into a container with a bottom reservoir, and honestly that was kind of late in the season: we didn't consistently reach 50 to 60 degrees Fahrenheit outdoors until late May this year. So here it is on May 24th, 2025, and just a week or so later, on June 2nd, 2025, you can see that rapid growth.

First Flowering and Harvesting: Late June

Then, about three or four weeks later, on June 29th, 2025, we finally got flowers. Once the plant reaches this stage, you start picking the flower heads; you can do it by hand. Then I wash them, rinsing them thoroughly with water and using a food-grade sanitizer. Next I put them in a food dehydrator and bring them above pasteurization temperature, around 160 degrees Fahrenheit, for two hours. After that I bring the temperature back down to around 100 degrees for the remainder of the drying time, because overheating reportedly removes the flavor. Everything tastes best fresh, of course, but chamomile holds up pretty well once it is dehydrated.

Continued Growth: Summer 2025

Here's another one, from July 26th. You can see it just kept expanding rapidly. At this point I more or less stopped harvesting and let the flowers fall to the ground, because I already had enough for a very long time. It was very low maintenance, and it never got any disease the entire time it was outside. A lot of flying insects like to visit these, so I wash the flowers thoroughly and only take the most pristine ones. They propagate so quickly that you can be very picky about what you keep.

Harvest Results: November 2025

So here's the result, as of November 4th, 2025: the flowers I dried throughout the year. I would say one to two of these is about the amount typically in a tea you would buy in the store. The key is to let them steep in hot water for about 10 minutes, and like I said, you only need one to two of them.

And here it is, check this out: the plant itself on November 4th, 2025, which is today. It has receded somewhat late in the season, and I stopped picking any of the flowers, but amazingly it still has a few on it. We've had a few freezing nights; we're dropping below 0 degrees Celsius, or 32 degrees Fahrenheit, probably twice a week now. I will probably cover it and keep water off it during the winter. I don't think this plant is expected to survive the winter, especially out of the ground, because its roots will get very cold. But I'll put it somewhere warmer and maybe cover the top, and maybe it'll survive for next season.

Key Takeaways

So I hope this has been helpful, and I would greatly encourage you to try this plant on your own. It's very easy to grow. When I was doing my research, I read that it was very easy to grow, but I didn't believe it. It really is. It's a fun plant to have around, it smells great in your garden, and it's great for tea and any other culinary experiments you want to try with it. Good luck with this plant!

Principle of Production Purpose Submissions

Tue, 23 Sep 2025 10:00:00 EST

Steven Oxley recently wrote an article about the concept of production purpose. Broadly, he was referring to careful scope planning for software projects based on how that effort will affect production and the value derived from it. I submitted responses to his questions.

Question 1: What is a principle that you've (re-)discovered during your work?

Q (Steven): What is a principle that you've (re-)discovered during your work? Bonus points if it's related to DevOps or Platform Engineering.

A (Charles): I agree 100% with production purpose. I deal with a lot of full-stack deliveries. Customers are often not very interested in the back end: it must just work 100% of the time, scale, be secure, be cost-effective, etc., and that's it. I suppose production purpose incorporates those objectives. On the front end, yes, you get a lot of customer feedback on visuals and functionality. I've continuously rediscovered that tools make great developers better (like Terraform, which you mention). It has always come down to the tools, as long as you have a developer who is good at problem solving. Many great developers can figure out any given problem with enough time, but tools accelerate that process. There are so many tools emerging right now that finding the time to continuously evaluate and incorporate them can be a challenge. Terraform is hot now, but will agents soon be able to write and maintain Terraform configurations for us?

Question 2: How have you noticed the principle of production purpose crop up in your work?

Q (Steven): How have you noticed the principle of production purpose crop up in your work?

A (Charles): The teams I work with often have to incorporate direct customer feedback. This goes straight to production purpose: you are being told what is needed for production. However, new projects always have situations where our teams identify, during iteration cycles, something that may become a future issue, so we build for it in advance of customer feedback. We do this in cases where the issue is open-and-shut. Advance customer approval of features and designs mitigates these situations, but they still crop up on complex projects.

Question 3: What are problems you've had with your production systems?

Q (Steven): What are problems you've had with your production systems that you've struggled to get other people to care about? If you have some, maybe you could try using the principle of production purpose to overcome some of the friction you're experiencing. If you do, please let me know how it goes.

A (Charles): When architecting solutions, I always try to weigh production throughput against cost. It's easier to build for speed and ignore cost. Long-term cost is often not a concern for many companies building a new product, as time to market is usually the priority. In the back of my mind, I know that if I don't build for cost-effectiveness and the company experiences hardship, it may mean turbulence. Wouldn't it be better, where possible, to seriously incorporate cost into architecture planning and mitigate any potential bumps? That thought process is so abstract, and so indirectly related to developer responsibilities and rewards, that many project teams ignore it. Usually, this kind of thinking is rewarded only after a cost-saving initiative is launched.

AWS LAMP Stack With PHP 8 on Ubuntu and Terraform

Wed, 15 Feb 2023 11:56:58 EST

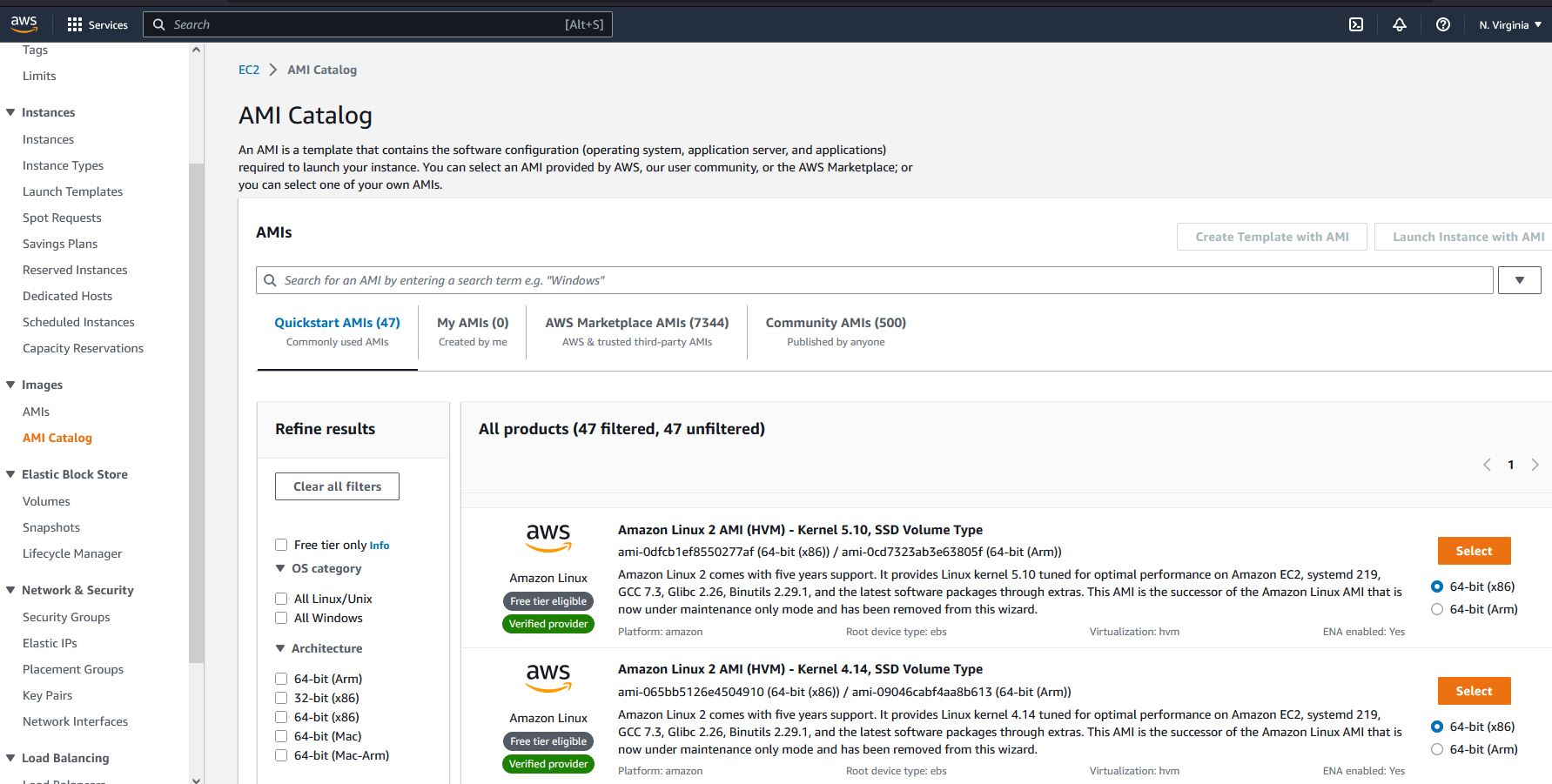

Every now and then I need to quickly create a Linux, Apache, MySQL (or MariaDB), and PHP stack in Amazon Web Services (AWS). Terraform is a perfect tool for this, as it turns a roughly thirty-minute task into a few minutes. Once you become familiar with Terraform, it is easy to see why major companies across the world use it to help manage complex infrastructure.

The main thing needed to go beyond launching a default, unpatched, unconfigured virtual machine is a tailored initialization script, passed to the instance through Terraform's user_data argument. Below is the initialization shell script I use with Terraform to set up Ubuntu as a PHP 8 LAMP stack as of February 2023. If you are just starting out with Terraform, you will likely need to refer to other material for the basics.

A few important notes:

- AMI IDs are region-specific. The AMI ID provided here works only in us-east-1 (N. Virginia).

- Terraform applies fairly sensible defaults. I already had subnets and security groups set up, so I provide those explicitly. I also configure the root block device to be deleted on termination and limit it to a 10 GiB volume.

- I store AWS access keys in the variables.tf file. For my particular setup, the environment variables used in many tutorials do not make sense.

- I have a key pair named “Miner_Sample” in my AWS account. Specifying that key pair in the Terraform file lets me access all of my EC2 instances over SSH.

- I am using Terraform 1.3.6 on Windows 10, with the Terraform AWS provider and the AWS CLI for Windows installed.

- After you run terraform apply, the initialization script keeps running on the instance for several minutes, even once Terraform reports that the instance has been created.

- These images change constantly. If you run into trouble, you may need to SSH into the VM to see what went wrong; cloud-init writes the output of the user_data script to /var/log/cloud-init-output.log.
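Because AMI IDs are region-specific and the images change constantly, one option is to look up a current ID rather than reusing the one in main.tf. The sketch below is an assumption-laden helper, not part of the original setup: it assumes a bash shell (on Windows, for example WSL or Git Bash) and a configured AWS CLI, and the function name latest_jammy_ami is hypothetical. 099720109477 is Canonical's publisher account, and the name filter follows Canonical's naming for Ubuntu 22.04 (Jammy) amd64 server images.

```shell
#!/usr/bin/env bash

# Hypothetical helper: print the newest Canonical Ubuntu 22.04 (Jammy) amd64
# AMI ID for a region. Assumes the AWS CLI is installed and configured.
latest_jammy_ami() {
  local region="${1:?usage: latest_jammy_ami <region>}"
  # 099720109477 is Canonical's AWS account; the wildcard after hvm-ssd
  # matches both the gp2 and gp3 flavors of the image name.
  aws ec2 describe-images \
    --region "$region" \
    --owners 099720109477 \
    --filters "Name=name,Values=ubuntu/images/hvm-ssd*/ubuntu-jammy-22.04-amd64-server-*" \
              "Name=state,Values=available" \
    --query 'sort_by(Images, &CreationDate)[-1].ImageId' \
    --output text
}
```

Running `latest_jammy_ami us-east-1` prints a single ID that could go into the ami argument of main.tf; checking the image name in the EC2 console before relying on it is still a good idea.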

Here is the bash initialization script, named init_lamp_ubuntu_22.sh in this example:

#!/bin/bash

# First off, we need swap space. These instances come with no swap,
# and I do not care how slow they get.
# In most cases you want no swap (with a Docker container or a cluster,
# for example, that makes sense), but not here.
sudo fallocate -l 1G /swapfile
sudo chmod 600 /swapfile
sudo mkswap /swapfile
sudo swapon /swapfile

# Back up fstab
sudo cp /etc/fstab /etc/fstab.bak

# Make the swap file permanent, even though our VM probably won't be
echo '/swapfile none swap sw 0 0' | sudo tee -a /etc/fstab

# The default swappiness of 60 is fine.

# Debian, which Ubuntu is based on, can stop at interactive prompts during
# package operations. DEBIAN_FRONTEND=noninteractive disables them so the
# script can run unattended.

# Refresh the package listings
sudo DEBIAN_FRONTEND=noninteractive apt-get update -y

# Upgrade the installed packages
sudo DEBIAN_FRONTEND=noninteractive apt-get upgrade -y

# Install Apache
sudo DEBIAN_FRONTEND=noninteractive apt-get install -y apache2

# Install MySQL
sudo DEBIAN_FRONTEND=noninteractive apt-get install -y mysql-server

# Install PHP plus its Apache and MySQL modules
sudo DEBIAN_FRONTEND=noninteractive apt-get install -y php libapache2-mod-php php-mysql

# Create a phpinfo file; delete it as soon as you have confirmed PHP renders.
# Piping through "sudo tee" makes the file write itself run as root, which
# "sudo echo ... > file" does not.
echo "<?php phpinfo(); ?>" | sudo tee /var/www/html/phpinfo.php > /dev/null
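The swap section at the top of the script appends to /etc/fstab every time it runs. user_data normally runs only on the first boot, so this rarely matters, but a duplicate fstab line is easy to create if the script is ever reused by hand. Below is a minimal sketch of an idempotent variant; the helper names ensure_line and ensure_swapfile are hypothetical, and ensure_line takes the target file as a parameter so it can be tried on a scratch file before pointing it at /etc/fstab.

```shell
#!/usr/bin/env bash

# Hypothetical helper: append LINE to FILE only if an identical line
# is not already present.
ensure_line() {
  local file="$1" line="$2"
  touch "$file"
  if ! grep -qxF -- "$line" "$file"; then
    printf '%s\n' "$line" >> "$file"
  fi
}

# Hypothetical helper: create, enable, and persist a swap file unless it is
# already active. Must run as root (user_data scripts do). The defaults
# mirror the script above.
ensure_swapfile() {
  local path="${1:-/swapfile}" size="${2:-1G}"
  if swapon --show=NAME --noheadings | grep -qxF -- "$path"; then
    return 0 # already in use as swap, nothing to do
  fi
  [ -f "$path" ] || fallocate -l "$size" "$path"
  chmod 600 "$path"
  mkswap "$path"
  swapon "$path"
  ensure_line /etc/fstab "$path none swap sw 0 0"
}
```

In the init script, the four swap commands and the fstab append could then collapse into a single `ensure_swapfile` call.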

Here is the main.tf file:

terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 4.16"
    }
  }

  required_version = ">= 1.2.0"
}

provider "aws" {
  profile    = "default"
  region     = "us-east-1"
  access_key = var.aws_access_key
  secret_key = var.aws_secret_key
}

resource "aws_instance" "app_server" {
  # AMI IDs are region-specific; this one is for us-east-1
  ami           = "ami-0574da719dca65348"
  instance_type = "t3.nano"

  # Fill in your existing subnet and security group IDs
  subnet_id              = ""
  vpc_security_group_ids = []

  # Name of an existing EC2 key pair, used for SSH access
  key_name = "ENTER YOUR KEY NAME HERE"

  tags = {
    Name = var.instance_name
  }

  # The init script is passed to cloud-init and runs on first boot
  user_data = file("init_lamp_ubuntu_22.sh")

  root_block_device {
    delete_on_termination = true
    volume_size           = 10 # GiB
  }
}
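Terraform passes the init script to the instance as-is, so a shell syntax error only surfaces after boot, and the relative path given to file() is resolved against the directory Terraform is run from. The sketch below is a hypothetical pre-flight helper, assuming a bash shell run from the directory that holds main.tf: it checks that the script exists, asks bash -n to parse it without executing it, and then runs terraform init and terraform validate.

```shell
#!/usr/bin/env bash

# Hypothetical pre-flight check, run from the directory holding main.tf.
preflight() {
  local script="${1:-init_lamp_ubuntu_22.sh}"
  if [ ! -f "$script" ]; then
    echo "error: $script not found in $(pwd)" >&2
    return 1
  fi
  # Parse the init script without executing it to catch syntax errors early.
  bash -n "$script" || return 1
  # Non-interactive init, then check the configuration itself.
  terraform init -input=false > /dev/null || return 1
  terraform validate
}
```

If `preflight` succeeds, terraform plan and terraform apply can follow as usual.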

Here is the variables.tf file:

variable "instance_name" {
  description = "Value of the Name tag for the EC2 instance"
  type        = string
  default     = "Ubuntu_LAMP"
}

variable "aws_access_key" {
  type        = string
  description = "AWS access key"
  default     = ""
}

variable "aws_secret_key" {
  type        = string
  description = "AWS secret key"
  default     = ""
}
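Entering the keys as defaults works, but the file then holds secrets. If you would rather keep them out of variables.tf, Terraform also reads an environment variable named TF_VAR_<name> as the value of input variable <name>, and that value overrides the default. Below is a hypothetical bash wrapper (the name tf_with_env_keys is an assumption) that refuses to run Terraform unless both key variables are set.

```shell
#!/usr/bin/env bash

# Hypothetical wrapper: Terraform maps TF_VAR_aws_access_key and
# TF_VAR_aws_secret_key onto the variables declared in variables.tf.
tf_with_env_keys() {
  local name missing=0
  for name in TF_VAR_aws_access_key TF_VAR_aws_secret_key; do
    # ${!name} is bash indirect expansion: the value of the variable named $name
    if [ -z "${!name:-}" ]; then
      echo "error: $name is not set" >&2
      missing=1
    fi
  done
  [ "$missing" -eq 0 ] || return 1
  terraform "$@"
}
```

Usage would look like `export TF_VAR_aws_access_key=... TF_VAR_aws_secret_key=...` followed by `tf_with_env_keys apply`.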

Finally, here is the outputs.tf file:

output "instance_id" {
  description = "ID of the EC2 instance"
  value       = aws_instance.app_server.id
}

output "instance_public_ip" {
  description = "Public IP address of the EC2 instance"
  value       = aws_instance.app_server.public_ip
}
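The instance_public_ip output is handy for checking on the init script, which keeps running after Terraform finishes. The sketch below is a hypothetical helper, assuming a bash shell with ssh, the private key for the key pair named in main.tf, and a security group that allows SSH from your address; "ubuntu" is the default login user on Canonical's Ubuntu AMIs. On the instance, cloud-init status --wait blocks until the user_data script has finished.

```shell
#!/usr/bin/env bash

# Hypothetical helper: wait for the user_data script to finish on the new
# instance, then show the tail of its log and the state of the services.
# Run from the Terraform working directory after `terraform apply`.
check_lamp_init() {
  local key_file="${1:?usage: check_lamp_init <private-key-file>}"
  local ip
  ip="$(terraform output -raw instance_public_ip)" || return 1
  ssh -i "$key_file" "ubuntu@${ip}" \
    'cloud-init status --wait;
     sudo tail -n 20 /var/log/cloud-init-output.log;
     systemctl is-active apache2 mysql'
}
```

Once apache2 and mysql both report active, the phpinfo.php page can be checked in a browser and then deleted.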

I hope this information helps you get a basic setup running. If you are not already familiar with Docker, I would suggest digging into creating your own Docker images. Combining Docker with Terraform instance creation like this largely removes the need for OS-specific setup scripts beyond basic ones like the one provided here.